AI Overview Optimization & SEO: How to Rank in Google AI Overviews

What Are AI Overviews?

Google's AI Overviews, sometimes called "generative summaries" or "AI-generated summaries", are blocks of text that appear at the very top of search engine results pages (SERPs).

Instead of merely listing links and snippets, Google's AI Overviews synthesize information from the top-ranking pages to provide users with an immediate, comprehensive answer to their query.

In practice, when a user types a question such as "how to optimize content for AI Overview," Google's algorithm will:

- Retrieve the top N relevant results,

- Feed that content into a large language model (LLM), such as Gemini, trained for ranking and summarization, and

- Output a concise summary that combines the most relevant pieces of information. The result is an overview designed to answer the question directly.

In this post, we'll walk through how to optimize your content so it not only ranks organically but also appears inside the AI Overview, the new "position zero."

Using a real-life experiment, we'll show how this methodology works and present data suggesting it can yield articles that are up to 10% more likely to be used in the AI Overview and that, on average, earn around 50% more citations than the top-performing page.

Why Are AI Overviews Important for SEO and How Do They Impact It?

AI Overviews are fundamentally reshaping the SEO landscape, altering how users discover information and how businesses need to approach online visibility. They affect SEO in three main ways:

- Impact on Click-Through Rates: While AI Overviews can increase visibility for cited sources, they may also lead to decreased click-through rates for pages not directly cited in the overview.

- Visibility at "Position Zero": Even if your page ranks outside the top 3 organically, being featured in an AI Overview delivers tremendous brand exposure: users see your information first, often before any clickable results.

- Traffic Diversion vs. Brand Recognition: Although some users will read the AI Overview and move on without clicking, being cited in that overview can create subconscious trust. If your brand or domain is mentioned (e.g., "according to Forbes" or "in a report by Company X"), that mention can prompt users to remember your name when they return to make a purchase.

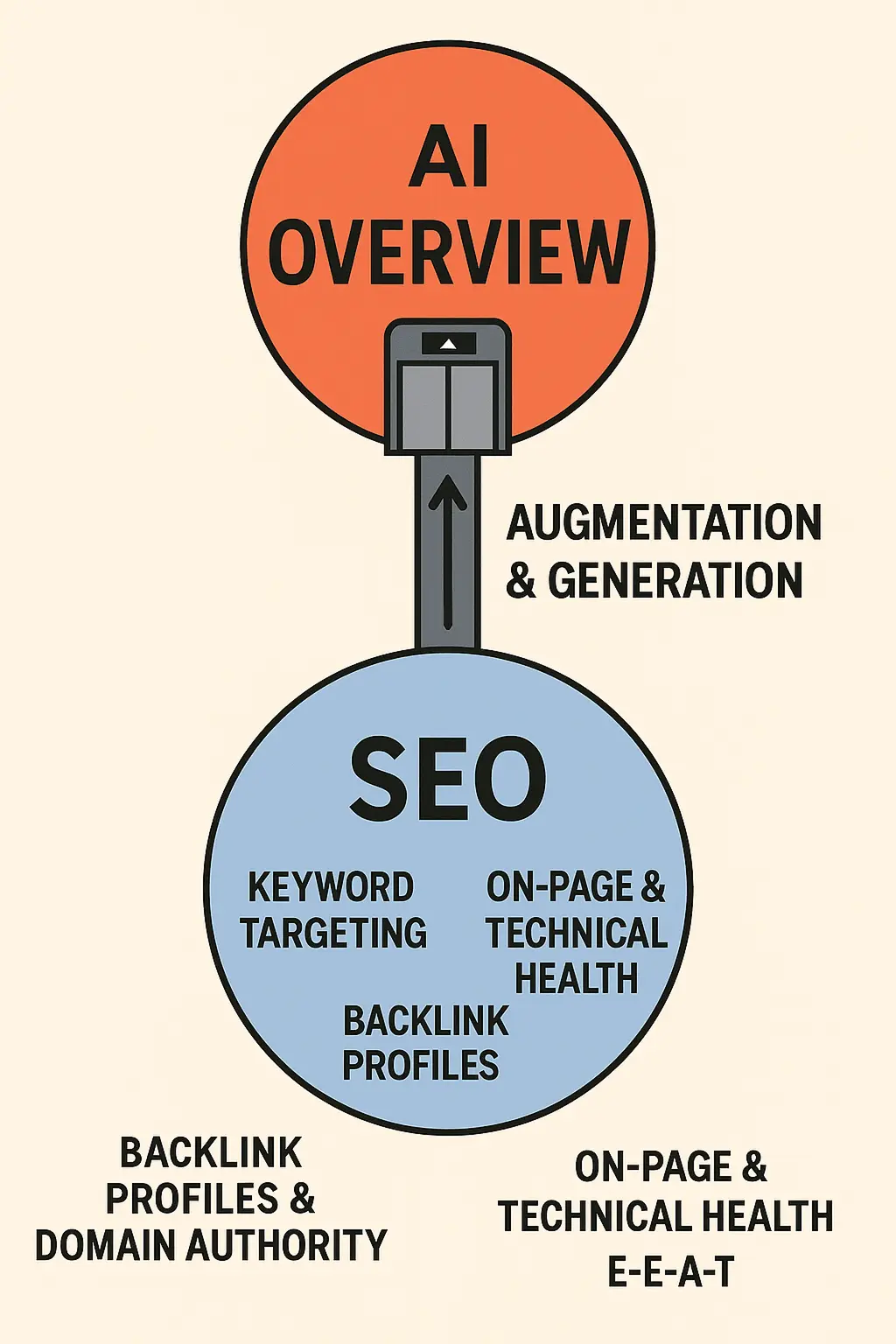

AI Overview SEO vs. Traditional SEO: Core Overlap and the "Extra Step"

Traditional SEO emphasizes elements such as:

- Keyword Targeting: Researching and placing relevant keywords (e.g., "optimize for AI Overview," "rank in Google AI Overview") in titles, headings, and body copy.

- Backlink Profiles & Domain Authority: Earning high-quality inbound links from reputable sites and building overall site trust to rank higher in the organic listings.

- On-Page & Technical Health: Ensuring fast load times, mobile-friendliness, correct canonical tags, and a crawlable site structure.

- E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness): Showcasing author credentials, citing reputable sources, and maintaining a strong brand presence.

All of these components are relevant to AI Overview SEO, since, as we will see below, Google's AI-powered retrieval stage still relies on classic ranking signals (keyword relevance, backlinks, domain strength, page speed, etc.) to select the top n pages for potential summarization.

But there is an extra step beyond ranking: once you appear in the top n organic positions, you must also ensure your content is formatted and structured so that Google's generative model can directly consume and summarize it. That is what gets a page to actually appear inside the AI Overview.

The RAG Model Lens

Google's AI Overviews are built on a RAG (Retrieval-Augmented Generation) framework:

- Retrieval Stage (R): Google's systems select the top n pages (often n = 50) based on traditional SEO ranking signals (classic PageRank, content relevance, domain authority).

- Augmentation Stage (A): The LLM ingests extracted passages or chunks from those top n pages, often via "dense embedding retrieval," where the model matches query embeddings to content embeddings.

- Generation Stage (G): The LLM synthesizes a coherent, human-readable summary by fusing the retrieved passages, while ensuring factual consistency and fluency.

From an SEO standpoint, passing the Retrieval stage still requires traditional tactics: strong backlinks, keyword relevance, and solid technical health. But to succeed in the Augmentation and Generation stages, your content must follow the AI Overview's expected format. Without that structure, the model can't extract the right "chunk," and you won't appear in the AI Overview, even if you rank organically.
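To make the three stages concrete, here is a minimal sketch of a toy RAG pipeline. It is not Google's implementation or our in-house model: the function names, the example pages, and the use of bag-of-words cosine similarity (instead of dense embeddings and real ranking signals) are all simplifying assumptions made for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(pages: dict[str, str], query: str, n: int) -> list[str]:
    """Retrieval: keep the n pages most similar to the query.
    Assumption: similarity stands in for the classic ranking signals."""
    q = Counter(tokenize(query))
    ranked = sorted(
        pages,
        key=lambda url: cosine(q, Counter(tokenize(pages[url]))),
        reverse=True,
    )
    return ranked[:n]


def augment(pages: dict[str, str], urls: list[str], query: str, k: int):
    """Augmentation: split the retained pages into paragraph chunks
    and keep the k chunks that best match the query."""
    q = Counter(tokenize(query))
    chunks = [
        (url, para.strip())
        for url in urls
        for para in pages[url].split("\n\n")
        if para.strip()
    ]
    chunks.sort(key=lambda c: cosine(q, Counter(tokenize(c[1]))), reverse=True)
    return chunks[:k]


def generate(chunks) -> str:
    """Generation: a real system would prompt an LLM with the chunks.
    Here we only concatenate them and tag each with its source."""
    return " ".join(f"{text} [{url}]" for url, text in chunks)


# Hypothetical mini-corpus, invented for this example.
pages = {
    "a.example/guide": "What is AI-driven analytics?\n\n"
    "AI-driven analytics uses machine learning to surface insights from SaaS product data.",
    "b.example/blog": "Our company history.\n\n"
    "We started in a garage and love analytics dashboards.",
    "c.example/recipes": "How to bake bread.\n\nMix flour, water and yeast.",
}
query = "what is AI-driven analytics for SaaS"

top_pages = retrieve(pages, query, n=2)
top_chunks = augment(pages, top_pages, query, k=2)
overview = generate(top_chunks)
print(top_pages)
print(overview)
```

In this toy run the off-topic page never reaches the augmentation stage, and both selected chunks come from the page whose heading and first paragraph echo the query. A production system would swap the word-count vectors for dense embeddings, but the division of labor between the stages is the same.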

Technical AI Overview Optimization: Experimental Results

Below, we dive into a real-world experiment we conducted using our in-house Gemini-powered RAG model, specifically built for testing AI Overview inclusion. We indexed 50 curated pages: a mix of long-form articles, listicles, how-to guides, and one post specifically generated to optimize for AI Overview inclusion.

Our goal was to see how both content structure and domain signals affect whether Google's AI Overview would pull from a page, and how often.

In a nutshell, our experiment reveals a crucial pattern: AI Overviews generated using RAG models tend to follow a consistent content structure. More importantly, by creating an article that meticulously mirrors this structure, we can substantially increase its chances of appearing in the AI Overview.

Experiment Setup

- Targeted Keyword: The core query used for testing was "AI-Driven Analytics SaaS".

- In-House RAG Model: We developed a custom RAG (Retrieval-Augmented Generation) pipeline for experimentation, replicating the retrieval and summarization logic of a typical AI Overview.

- Corpus of 50 Pages: Long-form research pieces, listicles with clear subheads, step-by-step how-to guides.

- One flagship article: An AI-generated page, specifically engineered to maximize its performance against our RAG model's internal metrics, aiming for its selection and inclusion in the AI Overview.

AI Overview Optimization: Key Metrics Tracked

- Inclusion Score (0 to 1): This is a metric provided by RAG models and represents the probability that at least one passage from a page is retrieved and used in the AI Overview's final summary. In plain English, a score of 0.643 means there is roughly a 64 percent chance that some part of that page would end up in the generated overview.

- Citation Count: The total number of times a page's content is explicitly quoted or paraphrased in the AI Overview. For example, a count of 10.2 indicates that, on average across multiple runs, the model cited the page about 10 times.

Note on Metrics: Inclusion Score and Citation Count are not strongly correlated. A page might have a high Inclusion Score, meaning it almost always contributes at least one passage, yet receive relatively few total citations if each time it only contributes a single sentence. Conversely, a page with a moderate Inclusion Score could be heavily quoted (high Citation Count) in the few iterations where it is selected. In other words, scoring well for "getting picked at least once" doesn't guarantee many passages will be woven into the overview, and vice versa.
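The difference between the two metrics is easier to see with numbers. The sketch below is an assumption-laden illustration: the run log is invented, and it estimates inclusion empirically as the share of runs in which a page is cited at least once, whereas the Inclusion Score in our experiment is a probability reported by the RAG model itself.

```python
from collections import Counter

# Hypothetical log: each inner list holds the page cited for every
# passage that ended up in the overview during one run.
runs = [
    ["page_a", "page_b", "page_b", "page_b", "page_b"],
    ["page_a"],
    ["page_a", "page_c"],
    ["page_a", "page_b", "page_b", "page_b"],
]


def inclusion_rate(page: str, runs: list[list[str]]) -> float:
    """Share of runs in which the page contributes at least one passage."""
    return sum(page in run for run in runs) / len(runs)


def avg_citations(page: str, runs: list[list[str]]) -> float:
    """Average number of passages per run attributed to the page."""
    return sum(Counter(run)[page] for run in runs) / len(runs)


for page in ("page_a", "page_b"):
    print(page, inclusion_rate(page, runs), avg_citations(page, runs))
```

Here `page_a` appears in every run but only once each time, while `page_b` appears in half of the runs and is cited heavily when it does, so it ends up with the higher average citation count despite the lower inclusion rate.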

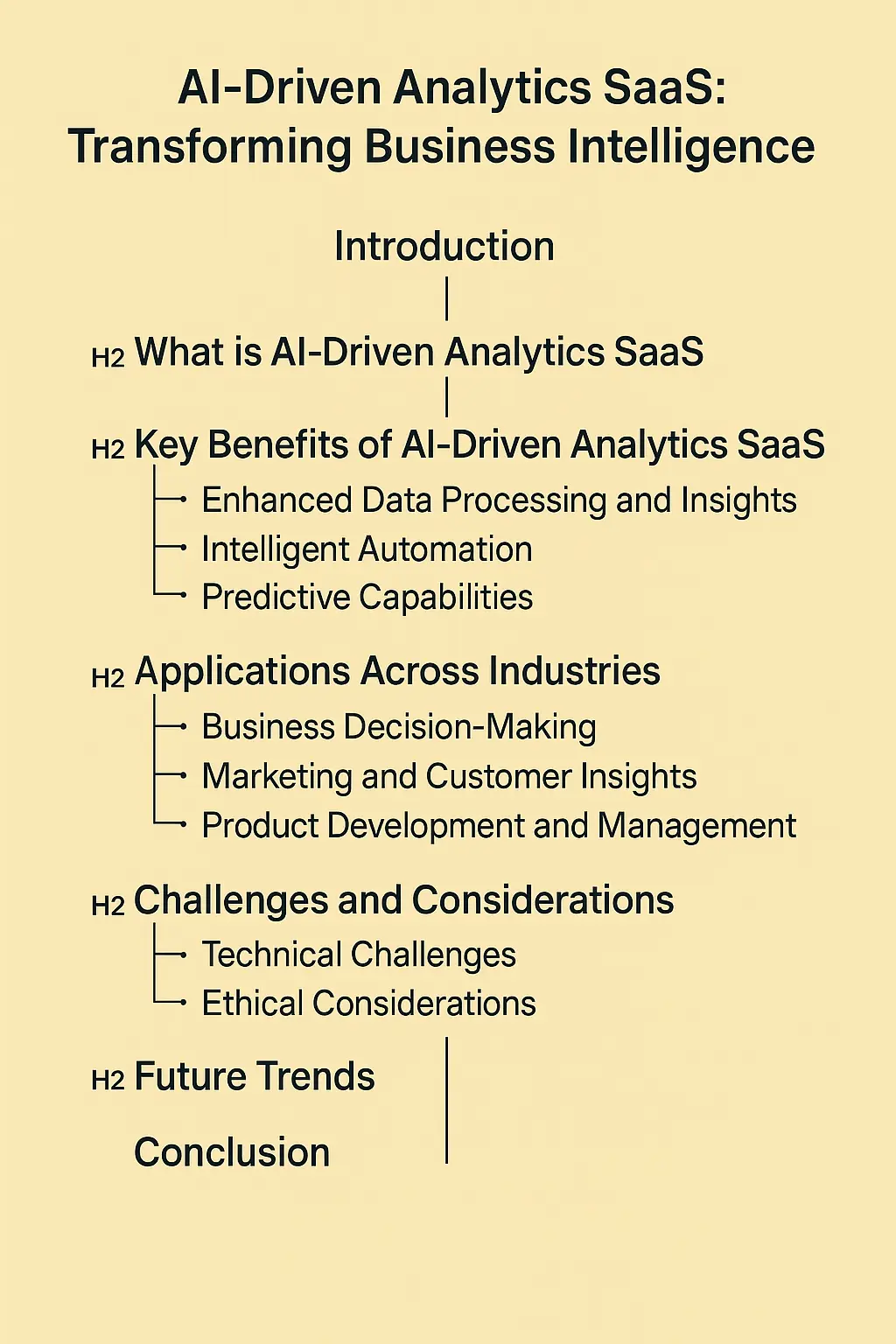

The Experiment: AI Overview Response Structure for "AI-Driven Analytics SaaS"

When querying our RAG model with the keyword "AI-Driven Analytics SaaS" and analyzing its responses based on a general corpus of web articles (simulating a real web search scenario and before we introduced our own specifically optimized content), we observed that while individual responses exhibited natural variations, the generative summaries generally followed this core hierarchy on average:

Think of this as the inherent "blueprint" an AI Overview tends to use. If your content naturally aligns with this format and addresses the underlying question phrasing, its chances of being extracted and summarized increase significantly.

To construct its answer, the model internally ranks the content from every page in the corpus and decides which passages to pull. The table below shows a subset of the top results, with the Max Inclusion Score and Average Citation Count for the highest-performing pages.

| Title/URL | Max Inclusion Score | Avg. Citation Count |
|---|---|---|
| What is AI-Driven Analytics? Pros, Cons and Use Cases | 0.643 | 10.2 |
| AI-Driven Analytics | 0.697 | 1.8 |
| AI-Driven Insights in SaaS Product Management | 0.641 | 1.8 |
| AI in SaaS: How AI is Transforming the Software Industry | 0.639 | 0.8 |
| AI for SaaS analytics | 0.702 | 0.6 |
| How AI is transforming the SaaS landscape | 0.642 | 0.2 |
| Top 10 AI Development Trends Shaping the Future of SaaS | 0.623 | 0.2 |

Column Explanations:

- Max Inclusion Score: Since a single page can have multiple relevant passages, we report the highest observed score (0 – 1) among all its passages. A score of 0.702 means that at least one passage had a 70.2 percent chance of being used in the summary.

- Avg. Citation Count: The average number of times that page's content is cited or paraphrased across multiple runs. For example, 10.2 means that, on average, the model cited the page about 10 times per run.

This means that for a query related to "AI-Driven Analytics SaaS" the AI Overview would likely cite the article "What is AI-Driven Analytics? Pros, Cons and Use Cases" an average of 10.2 times, contributing to the typical response structure previously identified as the "blueprint." Furthermore, some content from that page has approximately a 64.3% probability of being included in the final AI Overview.

Note: The total number of citations can vary significantly from one RAG model to another. Our internal model is configured to be more generous with citations than some others for thorough testing purposes.
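As a quick sanity check on how loosely the two columns track each other, the sketch below computes the Pearson correlation over the seven rows of the table. The values are copied from the table; the helper function is ours, and with only seven data points the result is illustrative rather than statistically meaningful.

```python
import math

# (title, max inclusion score, avg. citation count), copied from the table.
rows = [
    ("What is AI-Driven Analytics? Pros, Cons and Use Cases", 0.643, 10.2),
    ("AI-Driven Analytics", 0.697, 1.8),
    ("AI-Driven Insights in SaaS Product Management", 0.641, 1.8),
    ("AI in SaaS: How AI is Transforming the Software Industry", 0.639, 0.8),
    ("AI for SaaS analytics", 0.702, 0.6),
    ("How AI is transforming the SaaS landscape", 0.642, 0.2),
    ("Top 10 AI Development Trends Shaping the Future of SaaS", 0.623, 0.2),
]


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


inclusion = [row[1] for row in rows]
citations = [row[2] for row in rows]
correlation = pearson(inclusion, citations)

top_by_inclusion = max(rows, key=lambda row: row[1])[0]
top_by_citations = max(rows, key=lambda row: row[2])[0]

print(f"Pearson r = {correlation:.2f}")
print("Highest inclusion score:", top_by_inclusion)
print("Most citations:", top_by_citations)
```

The coefficient comes out close to zero, and the page with the highest Max Inclusion Score is not the page with the most citations, which matches the earlier note that the two metrics are not strongly correlated.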

Introducing a Generated Text with the Same Structure & Blueprint

To specifically test the impact of aligning content with the AI Overview's preferred structure, we created an AI-generated version of our AI-Driven Analytics SaaS article. This new version was engineered to meticulously follow the RAG-friendly blueprint previously discussed: it used H2/H3 headings that mirrored the blueprint's questions and featured content that directly answered them. We then fed this optimized post to our in-house RAG model, processing it multiple times alongside the original 50 pages to observe its performance:

- Max Inclusion Score: ≈ 0.754

- Avg. Citation Count: ≈ 15.4

Because this generated version matched the expected structure and delivered its content in the expected style, it outperformed even the highest-scoring pages from our initial corpus (those listed in the table above).

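Depending on the baseline, our AI-generated text's Max Inclusion Score is roughly 7% to 17% higher in relative terms (0.754 versus 0.702 for the best-scoring page and 0.643 for the most-cited page), and it achieved, on average, around 50% more citations (15.4 versus 10.2) than the top-performing page from our initial corpus.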
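The relative differences can be recomputed directly from the reported figures. The snippet below is a small worked example: the numbers come from the table and the results above, while the choice of baselines and the helper name `relative_lift` are our own assumptions about how to frame the comparison.

```python
def relative_lift(new: float, baseline: float) -> float:
    """Relative improvement of `new` over `baseline` (0.10 means +10%)."""
    return (new - baseline) / baseline


# Figures reported in this post.
generated_inclusion = 0.754
generated_citations = 15.4
best_corpus_inclusion = 0.702   # "AI for SaaS analytics"
top_cited_inclusion = 0.643     # "What is AI-Driven Analytics? ..."
top_cited_citations = 10.2

lift_vs_best_inclusion = relative_lift(generated_inclusion, best_corpus_inclusion)
lift_vs_top_cited = relative_lift(generated_inclusion, top_cited_inclusion)
citation_lift = relative_lift(generated_citations, top_cited_citations)

print(f"Inclusion vs. best-scoring page: {lift_vs_best_inclusion:.1%}")  # 7.4%
print(f"Inclusion vs. most-cited page:   {lift_vs_top_cited:.1%}")       # 17.3%
print(f"Citations vs. most-cited page:   {citation_lift:.1%}")           # 51.0%
```

Which baseline you pick changes the inclusion figure noticeably, so it is worth stating it whenever you quote a percentage.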

It's worth noting that this core finding, the significant performance boost for content structurally aligned with the AI Overview's preferred format, is not isolated. Similar patterns of improved inclusion and citation were consistently observed in other internal experiments we conducted, even when testing with different keyword sets and content combinations.

How Does This Compare to AI Overviews in Real Life?

It's important to note that while our AI-generated text excelled in the RAG model due to its perfect structural alignment, in a real-world scenario, it might initially struggle in the crucial retrieval phase of search. This is because it could lack key SEO strengths such as established authority, comprehensive content depth, or a strong backlink profile, which are vital for achieving a high organic ranking in the first place.

However, this experiment highlights a critical point: Once a page clears the initial organic ranking (traditional SEO), the RAG model's behavior in selecting content for the AI Overview is heavily influenced by structure and question-answer alignment. These become decisive factors in securing passages for the AI Overview.

Conclusion

Through our work, we've defined a clear, repeatable method for AI Overview Optimization that complements traditional SEO to secure "position zero" in Google's AI-generated summaries. The key pillars are:

- Traditional SEO Foundation: Rank within the top n organic positions by targeting relevant keywords, building high-quality backlinks, ensuring fast load times and mobile-friendliness, and demonstrating E-E-A-T through expert authorship and reputable citations.

- AI Overview Blueprint: Mirror the AI Overview's structure exactly by using H2/H3 headings that replicate the questions the model asks. Under each heading, provide concise, direct answers so the AI can easily identify and extract those "chunks."

- Content Alignment: Beyond formatting, ensure every paragraph and bullet under those headings precisely addresses the AI Overview's expected questions. This tight alignment between the model's phrasing and your answer style is critical to being chosen for summarization.

By embedding this combined approach into your editorial workflows, making sure every page not only ranks well but also "speaks" the AI Overview's language, you position yourself to consistently influence which passages are pulled into Google's generative summaries.
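One way to build this into an editorial workflow is a simple pre-publication check. The sketch below is a hypothetical helper, not part of our RAG model: it assumes drafts are written in Markdown, treats a heading ending in "?" as question-style, and uses an arbitrary 60-word limit as the definition of a "concise" first answer.

```python
import re

MAX_ANSWER_WORDS = 60  # hypothetical threshold for a "concise" answer


def audit_sections(markdown: str, max_words: int = MAX_ANSWER_WORDS) -> list[dict]:
    """Return one report per H2/H3 heading in a Markdown draft."""
    # re.split with a capture group yields
    # [preamble, heading1, body1, heading2, body2, ...].
    parts = re.split(r"^#{2,3}[ \t]+(.+)$", markdown, flags=re.MULTILINE)
    reports = []
    for heading, body in zip(parts[1::2], parts[2::2]):
        paragraphs = [p.strip() for p in body.split("\n\n") if p.strip()]
        first_answer = paragraphs[0] if paragraphs else ""
        words = len(first_answer.split())
        reports.append(
            {
                "heading": heading.strip(),
                "is_question": heading.strip().endswith("?"),
                "answer_words": words,
                "concise": 0 < words <= max_words,
            }
        )
    return reports


# Invented draft used only to exercise the check.
draft = """# AI-Driven Analytics for SaaS

## What is AI-driven analytics?

AI-driven analytics applies machine learning to product data to surface insights automatically.

## Our story

We started in a garage and love dashboards.
"""

reports = audit_sections(draft)
for report in reports:
    print(report)
```

In this example the first section passes both checks, while "Our story" is flagged as not being phrased as a question. The thresholds are placeholders; tune them against the structure you actually observe in the overviews for your target queries.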

Future of AI Overview SEO

The SEO landscape is entering a transformative era driven by generative AI. As discussed in our post on GEO (generative engine optimization), this shift will only become more relevant, bringing big changes to the industry:

- Declining Click Volume from LLM Answers: Early "hallucination traffic" (users clicking the source to verify facts) will diminish as LLMs improve factual accuracy. As chatbots become more accurate, users will get answers directly in the chat interface, further reducing "extra reading" and click-through to source pages.

- AI Overview Citations as the Primary Visibility Metric: Rather than raw site visits, success will be measured by how often AI Overviews pull passages from your site; each instance counts as a citation.

- Brand Mentions Fuel Authority Even Without Citations: Even if your text isn't quoted verbatim, an AI Overview may still name your brand, signaling the LLM's trust in your site. Citations mean your content is used; mentions mean your brand is trusted. Both boost awareness and later user engagement.

Curious how your own content stacks up for AI Overviews? To see if your pages are well-optimized to appear in these generative summaries and understand your potential AI Overview score, reach out to us at info@sellm.io for a personalized analysis.

Frequently Asked Questions

What are Google AI Overviews?

Google AI Overviews are AI-generated summaries that appear at the top of search results, synthesizing information from multiple sources to provide comprehensive answers to user queries. They represent a shift from traditional link-based results to direct, conversational responses that aim to answer questions immediately without requiring clicks to source websites.

How do I optimize content for AI Overviews?

To optimize for AI Overviews, focus on creating well-structured, authoritative content with clear headings, direct answers to common questions, proper schema markup, and comprehensive coverage of topics. Ensure your content ranks well organically first, then optimize for AI consumption through clear formatting and factual accuracy. Mirror the AI Overview's preferred structure and use headings that match the questions AI models ask.

Do AI Overviews reduce website traffic?

While AI Overviews may reduce some click-through traffic, they significantly increase brand visibility and authority. Being cited in AI Overviews can build trust and brand recognition, often leading to increased traffic from users seeking more detailed information or making purchasing decisions. The key is viewing citations and brand mentions as valuable visibility metrics beyond traditional click-through rates.

What content performs best in AI Overviews?

Content that performs well in AI Overviews is typically comprehensive, well-structured, factually accurate, and directly answers user questions. It should have clear headings, use authoritative sources, include relevant data and statistics, and be optimized for featured snippets. Content that follows the AI Overview's preferred blueprint structure has up to 10% higher inclusion rates.

How does AI Overview optimization differ from traditional SEO?

AI Overview optimization builds on traditional SEO fundamentals but adds an extra layer: optimizing for AI consumption. While traditional SEO focuses on ranking factors, AI Overview optimization also requires content to be easily digestible by AI models through clear structure, direct answers, and comprehensive topic coverage. It's about ranking first, then being selected for AI summarization.